Nuclear Fusion Breakthrough: A Long Time Coming

There is perhaps no better example of a double-edged sword than science; it can represent the best of humanity, and it can just as easily represent the worst. This duality helps explain why some of the greatest inventions, or their precursors, came about during wartime: scientists derived the first chemotherapy drug from mustard gas, a terrifying weapon on the battlefield. Arguably the quintessential embodiment of the Jekyll-and-Hyde nature of science, Fritz Haber invented ammonia synthesis, staving off an impending mass famine and consequently saving the lives of billions, but his work on chemical weapons also led to the development of Zyklon B, the poison used in the Nazi gas chambers. The insidious power and ruthless efficiency of Nazi German science were already a source of alarm when two German scientists discovered nuclear fission on the eve of the war, and as the war drew on with no end in sight, that tension reached a breaking point. After a series of breakthroughs pertinent to the development of a nuclear bomb, the U.S. organized its famed Manhattan Project, with the help of Canada and the UK. In classic fashion, out of the brutal nuclear annihilation of Hiroshima and Nagasaki came the promising prospect of nuclear energy; for better or for worse, science, or, more accurately, mankind, has apparently embraced the adage “out of darkness, cometh light.”

Indeed, early 20th-century nuclear physics was dark, uncharted territory, though many zealous trailblazers emerged on the road to understanding. At the turn of the century, J.J. Thomson’s discovery of the electron, made using a cathode ray tube, revolutionized the concept of the atom. Likewise, Ernest Rutherford’s gold foil experiment and his subsequent announcement of the nucleus, the small, dense center of the atom, took the study of atoms to a whole new level. These two discoveries set the stage for new inroads into the atomic world, though the first mention of nuclear fusion would come from a completely different realm of science: astronomy.

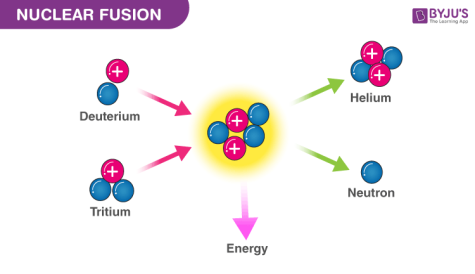

Nuclear fusion’s insidious origins are not its only paradoxical aspect: although it takes place in the very smallest of confines, the atom, it was first recognized in the very largest, the stars. British astrophysicist Sir Arthur Eddington theorized that what would soon be called nuclear fusion, the joining of light atomic nuclei to form a heavier one, was at the root of the unrelenting specks of brilliance that dotted the night sky. His work built on the findings of fellow countryman and mass spectrometry pioneer Francis Aston, who found that the mass of four hydrogen atoms was slightly more than that of one helium atom. It followed that if four hydrogen atoms were fused into a single helium atom, some mass would be left over. If mass was lost in the fusion, then, according to Einstein’s E = mc², which was still relatively new at the time, that missing mass would be released as a considerable amount of energy, and a sustained series of such reactions would release energy continuously. Eddington’s work would have no meaningful practical application for quite a while, because the reaction requires temperatures of millions of degrees. However, his theory would resurface later in the century, as nuclear science took a more sinister, deadly turn.
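The mass bookkeeping behind Eddington’s idea can be checked with a few lines of arithmetic. This is a simplified sketch, not a model of how stars actually fuse hydrogen (which happens through several intermediate steps). It assumes standard modern reference values for the atomic masses of hydrogen-1 and helium-4 and for the energy equivalent of one atomic mass unit; none of these numbers come from the article itself.

```python
# Reference values assumed for illustration (not from the article).
M_HYDROGEN_1 = 1.00782503  # atomic mass of hydrogen-1, in unified atomic mass units (u)
M_HELIUM_4 = 4.00260325    # atomic mass of helium-4, in u
MEV_PER_U = 931.494        # energy equivalent of 1 u in MeV, from E = mc^2


def mass_defect_hydrogen_to_helium() -> float:
    """Mass 'left over' when four hydrogen-1 atoms end up as one helium-4 atom."""
    return 4 * M_HYDROGEN_1 - M_HELIUM_4


defect = mass_defect_hydrogen_to_helium()
fraction = defect / (4 * M_HYDROGEN_1)  # share of the starting mass that disappears
energy_mev = defect * MEV_PER_U         # that missing mass converted to energy

print(f"mass defect: {defect:.5f} u ({fraction:.2%} of the starting mass)")
print(f"energy released: {energy_mev:.1f} MeV per helium atom")
```

The result, a loss of about 0.7 percent of the starting mass, corresponds to roughly 26.7 MeV for every helium atom formed, which is why even a small amount of fusion fuel can release so much energy.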

This turning point was due in part to Australian physicist Sir Mark Oliphant, who catalyzed the development of the Manhattan Project. His rise to scientific prominence was rather serendipitous; only after hearing a speech by Sir Ernest Rutherford, the man who discovered the nucleus, did he decide to pursue a career in nuclear physics. At the Cavendish Laboratory, where Rutherford worked, Oliphant carried out the first experimental demonstration of nuclear fusion; he also discovered tritium, which, alongside deuterium, is one of the two hydrogen isotopes that fuel the most widely pursued fusion reaction. In the early days of the Cold War, the U.S. detonated the first fusion bomb, “Ivy Mike,” using deuterium fuel; the same principle would later be applied to fuels containing tritium. The power of fusion was undeniable, but how to harness it was another question, one that proved much more difficult to answer.

The subsequent seven decades of research and development were riddled with problems, many of them compounded by poor decision-making. Scientists were set on the tokamak, a doughnut-shaped device that, simply put, uses powerful magnetic fields to confine the superheated fuel in which the fusion reaction takes place. Consequently, other designs and ideas received far less attention and funding, despite the tokamak’s many issues. Only in recent years have the countless alternative approaches to nuclear fusion begun to gain recognition.

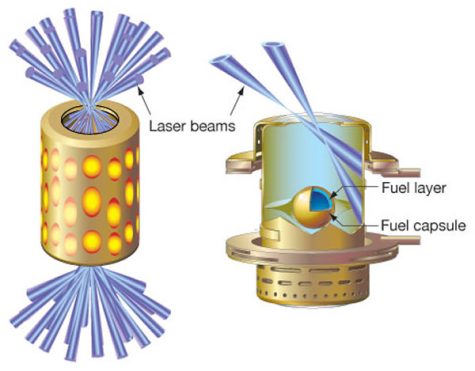

These new possibilities have already opened the floodgates to significant progress. Most recently, a team at the National Ignition Facility (NIF) at Lawrence Livermore National Laboratory (LLNL) took an incredible step forward. Using NIF’s world-renowned laser system, the team employed “inertial confinement fusion,” which uses intense laser energy to compress and heat a tiny capsule of fuel to extreme pressures and temperatures, triggering a fusion reaction. The experiment achieved scientific breakeven, meaning that the energy released by the reaction exceeded the laser energy delivered to the target. In fact, the reaction yielded 3.15 MJ, about 54 percent more than the 2.05 MJ delivered by the lasers that triggered it. In a field so plagued by false hope, this is a satisfying demonstration that the theory behind fusion energy can be put into practice. The breakthrough certainly offers hope for the future as the world, and the U.S. in particular, steers away from fossil fuels. Fusion energy promises an alternative in the race to keep the planet from drifting further into the tumultuous waters of climate change, and after so many decades of learning and theorizing, it may finally become a reality.
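The breakeven comparison comes down to a single division. The sketch below uses only the two figures reported for the NIF experiment; the function name `target_gain` is a hypothetical label chosen for illustration. It compares fusion output with the laser energy delivered to the target only, and does not account for the electricity drawn from the grid to run the laser system itself.

```python
# Figures reported for the NIF experiment, in megajoules.
LASER_ENERGY_MJ = 2.05  # laser energy delivered to the target
FUSION_YIELD_MJ = 3.15  # fusion energy released by the reaction


def target_gain(fusion_yield: float, laser_energy: float) -> float:
    """Hypothetical helper: ratio of fusion energy out to laser energy in.

    A value above 1 means the reaction released more energy than the
    lasers delivered, i.e. scientific breakeven in the sense used above.
    """
    return fusion_yield / laser_energy


gain = target_gain(FUSION_YIELD_MJ, LASER_ENERGY_MJ)
surplus_percent = (gain - 1) * 100  # how much more energy came out than went in

print(f"gain: {gain:.2f}")
print(f"surplus: {surplus_percent:.0f}% more energy than the lasers delivered")
print("scientific breakeven" if gain > 1 else "below breakeven")
```

A gain of about 1.54 is why the surplus is roughly 54 percent rather than more than 100 percent; doubling the input would have required a yield of at least 4.1 MJ.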

Paolo, a current senior, is looking forward to his third year writing for the Banner, this time as a section editor. Outside of the Banner, Paolo is the...