Journalism has reinvented itself a number of times. Just as the natural life cycle replaces the old with the new, journalists have adapted from print to radio, from radio to television, and from television to the internet, and now they have to navigate the risks and benefits posed by artificial intelligence. Generative AI, new and widely available, allows for the generation and compilation of content. It will have profound impacts on journalism, affecting how different occupations function as well as the culture surrounding hiring and career advancement.

As an aspiring journalist myself, I find it important to understand how AI might change the opportunities that will be available in coming years. When the opportunity arose to shadow Tamala Edwards, a widely respected journalist and broadcast news anchor for 6ABC Philadelphia, I accepted without hesitation. I had the privilege of gaining insight into the emerging influence of AI in the journalism industry and its impact in many spheres.

Many of these changes center on generative AI: technology, often built on large language models trained on vast amounts of existing material, that can create new content. Essentially powerful statistical models or highly sophisticated autocomplete, ChatGPT and DALL-E are among the AI tools starting to be implemented in different parts of news production. In its current state, generative AI is being used by many publications to do work behind the scenes, computing data, or even creating bylines for published articles. Despite its growing usefulness, the technology can still fall short in certain situations, which limits its potential for the time being. For many reporters, accuracy is a significant roadblock to fully integrating artificial intelligence tools: because these systems are built on statistical prediction, their output always carries some uncertainty, and some generative AI systems fabricate “facts” in a phenomenon that has come to be called “hallucination.” For instance, when asked for references to support a story, some generative AIs have made up articles that sound real and are attributed to real people but were entirely hallucinated by the AI. This highlights the ongoing need for experienced and competent human fact-checking as part of the process.

On the subject of AI in written news, Jennifer Heller, a WPVI 6ABC Action News producer, spoke at length about her interactions with AI and its influence on her role at a broadcast news channel. She brought up an article published by AP News, excitedly pointing out the hand that AI had in its production. The headline read, “Phillies bring 7-game win streak into game against the Reds,” and in fine print after the piece was a notice that said, “The Associated Press created this story using technology provided by Data Skrive and data from Sportradar.” This article exhibits one of the baseline roles that AI plays in the synthesis of the news. Heller marveled at what this implies for the journalism field: “It’s interesting to see how AI puts together news stories. You wouldn’t necessarily put this directly on air, but it’s pretty close to it.” Tools like this reflect how the profession’s operations have been shifting since artificial intelligence gained a footing in daily life.

Heller also recounted the changes that she has seen firsthand in her industry: “There used to be so many more people in the control room, but technology simplified it. We always talk about which position is gonna go next because we don’t need it anymore with technology.” As she gave me an informal tour of the 6ABC studio, we walked past a large, vibrant room filled with flashing lights and luminous switches. Three editors sat in the predominantly digital room, operating the graphics and the news timing. Heller told me that this was the control room, one of the most heavily AI-impacted sections of the studio. The sight reminded Heller of a time when “[a]n editor said to [her], ‘my job’s already starting to disappear but they can’t take away your job because you’re a writer’ and ever since he said that [she’s] started to realize that’s not true anymore. To some degree it seems like it’s [only] a matter of time.”

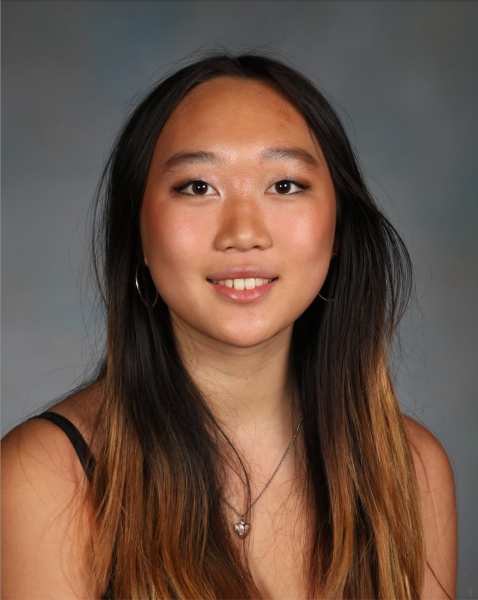

Tamala Edwards echoed this “it’s a matter of time” sentiment when we spoke, seated across from each other at a slim gray conference table, and she offered a wealth of perspective. The decorated 6ABC Action News anchor wore a bright yellow blazer over a white blouse that matched her ornate necklace adorned with gold and white metal flowers. “Computers are smart, they’ll figure it out,” said Edwards, a statement that captures an outlook we should all adopt moving forward. “Is AI going to be able to discern between the context and importance of different stories? Just because it’s true, is it gonna tell the right kind of story?” These questions are essential to understanding public perception and how it will play out in an era of AI-influenced news reporting. Edwards and I discussed how a robotic news anchor might affect a polarized audience. “The danger being if people continue to feel as though the media’s biased, there may be a space for them to be convinced that the robot is more fair,” said Edwards. However, she observed that “robots are programmed by people and so I think we have had a number of gatekeepers or people who have been raising warnings about AI, whether it’s facial recognition, the way it scans things on the web, the way it composes things, so the biases of human beings show up but [what makes this dangerous in part is that] people will think well it’s gotta be fair because it’s a robot.”

Though this paints an unsettling picture of the future, the threat of blind allegiance to a so-called “impartial” robot is likely some distance away because of the nuance and context that AI is not privy to. There are fundamental aspects of humanity that a computer won’t be able to replicate; Edwards noted that even if it gets the right information, that’s not all it takes to tell a story. “If I tell you a story, the point isn’t a fairytale story that you’ve heard a million times, it’s the emotion. What impact is it supposed to have? That’s a big part of telling the news, especially with features. The more I can tell you about various people, the more it conveys emotion.” A computer can try to replicate this appeal to human emotion, but its only technique is to copy from existing sources; it cannot tackle fresh events or new intersectional context. “The computer can interpret fact, but can it evoke emotion, can it write a story that makes you sit back and say, ‘Oh my God’? A computer can do the graphics in a movie, it can generate various scenes, but can it figure out how to compel you to feel something after you leave the theater or turn off the TV?” said Edwards, her voice unwavering, secure in her take.

I asked Edwards whether she had any thoughts on the possibility that, in the future, viewers may be able to select the racial profile of a reporter if reporters are AI-generated. She responded confidently, drawing on her days as a war reporter: “You turn on the BBC and the African reporter was in Greece, the Portuguese reporter was in Seoul, the Arab reporter was in Patagonia, they clearly just pick people to do stories, and I was amazed at how quickly I stopped caring about what the reporter was, and instead about what the story was.” There does not seem to be much correlation between a reporter’s race and the news they report. She continued, “How silly in America that we’re so hung up on what somebody is when they get to do the news. And the good thing is that we’ve moved a certain distance away from that, I feel as though you can pick any background and they’re telling all kinds of stories.” A concern for the future, though, is that so much of our society has changed because of what people want to see. What happens when people start to pick the bots? This uncertainty is bound to have lasting effects if AI becomes advanced enough to take over as an anchor during news broadcasts; in its current state, however, the technology does not make this an immediate worry.

Another consideration is that generative AIs are built on large language models, which carry intrinsic biases from the people who produced the content they were trained on, so the way AIs generate news reporting may be influenced in ways that are not always obvious. AIs also use algorithms that cater to the preferences of users, so there is a risk that they may skew the selection of stories and further polarize readers. If readers can ask to see only favorable news stories about a particular political candidate, or a particular spin on a government’s approach to legislation, they may only solidify their preconceptions. Seasoned journalists seeking to be objective may prove to be better curators of the news for readers and viewers.

Even so, journalism could still feel the heat of generative AI. Edwards and I speculated that this may be costly for those entering the industry. Edwards reasoned that publications looking to use the tool may apply it to basic stories, saving them the salary and benefits of an entry-level employee, likely a young person who is beginning a career in journalism. The question is, where does that lead? Where is the line going to be?

Edwards mused about the sacredness of starting a career in journalism: “It’s a question about what the future is of our industry. Every reporter has a story of where they started, I was an intern, I was a desk assistant, I was a go-fer, and I got somebody to let me do a live shot, I got someone to let me go cover a city council meeting, somebody let me go to a high school basketball game that no one wanted to cover, and over time you start to put together a body of work, a tape, to move up the ladder.” We both wondered how, without those opportunities, you create a body of work that conveys that the industry needs another generation and that you should be part of it. One outcome of this added competition from generative AI is an almost inevitable increase in competition among entry-level employees. However, this may simply produce better reporters, since employers will have to be more selective and deliberate in their hiring decisions.

This does not suggest a surrender to the apprehensive mindset that many have adopted on the topic. Instead, it calls for further education on how generative AI really works so that its implementation can be as smooth and positive as possible, even though certain negative repercussions are inevitable. Edwards and Heller both gave valuable insights on the prospective impacts of generative AI based on their past experience and predictions for the future. It will be crucial for prospective journalists to build relevant skills, growing their competence in managing AI while keeping the indispensable ability to draw in media consumers with engrossing human-interest and investigative stories. The industry is welcoming a hybridized and clearly uncertain future, and the only option for those involved is to adapt to changing times.